SyncRef: Fast & Scalable Way to Find Synchronized Time Series

At CVPR’20, we presented a new method (with code) for finding the largest subset of synchronized time series from a given set of time series. Specifically, we aim to find the largest subset of time series such that every pair in the subset is correlated by at least a given threshold value $\rho$. This is an NP-hard problem, and the exact solution is, in general, infeasible. We propose a new method, called SyncRef, for finding an approximate solution in an efficient…

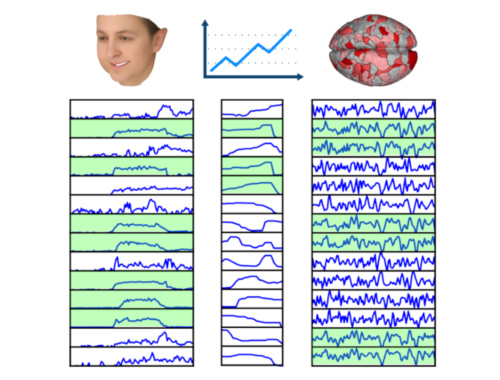
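To make the problem statement concrete, here is a minimal brute-force sketch of the exact search on a toy example. It is not SyncRef: the function names `is_synchronized` and `largest_synchronized_subset` and the toy signals are assumptions made for illustration, and the exhaustive loop over subsets is exactly the exponential cost that makes the exact solution infeasible for realistic inputs.

```python
import itertools

import numpy as np


def is_synchronized(corr, subset, rho):
    """True if every pair in `subset` has correlation of at least rho."""
    return all(corr[i, j] >= rho for i, j in itertools.combinations(subset, 2))


def largest_synchronized_subset(X, rho):
    """Exhaustive search over subsets of the rows of X, largest first.

    Hypothetical helper for illustration only: the cost grows exponentially
    with the number of time series, so it is usable only on tiny inputs.
    """
    corr = np.corrcoef(X)  # pairwise Pearson correlations between rows
    n = X.shape[0]
    for size in range(n, 0, -1):
        for subset in itertools.combinations(range(n), size):
            if is_synchronized(corr, subset, rho):
                return list(subset)
    return []


# Toy data (assumed): three mutually correlated series and two outliers.
t = np.linspace(0.0, 4.0 * np.pi, 400)
base = np.sin(t)
X = np.vstack([
    base,
    base + 0.05 * np.cos(3.0 * t),  # slightly perturbed copy
    2.0 * base + 1.0,               # scaled and shifted copy
    np.cos(t),                      # roughly uncorrelated with base
    -base,                          # perfectly anti-correlated
])
best = largest_synchronized_subset(X, rho=0.9)
```

On this toy input the search keeps the first three series and rejects the cosine and the sign-flipped copy.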

Is Pose & Expression Separable with WP Camera?

This study on facial analysis pipelines shows the limitations of using a weak perspective (WP) camera when it comes to decoupling pose and expression from face videos. Decoupling facial pose and expression within images through 3D reconstruction requires a camera model for the 3D-to-2D mapping. The WP camera has been the most popular choice; it is the default, if not the only option, in state-of-the-art facial analysis methods and software. The WP camera is justified…

Can I swap one matrix norm with another?

Some matrix norms are significantly costlier to compute than others. For example, the matrix 2-norm requires computing eigenvalues, whereas the matrix 1-norm is computed simply by finding the column of the matrix with the largest absolute sum (p283 of Carl D. Meyer). Thus, the following question becomes relevant: can we use one matrix norm in place of another? Fortunately, for some applications, we can. First of all, for analyzing limiting behavior, it makes no difference whether we use…
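The cost difference and the interchangeability can be checked numerically. The sketch below assumes NumPy and uses hypothetical helper names `norm_1` and `norm_2`; it computes the 2-norm from singular values (equivalently, the square root of the largest eigenvalue of $A^TA$) and verifies the standard equivalence bounds $\frac{1}{\sqrt{m}}\|A\|_1 \leq \|A\|_2 \leq \sqrt{n}\,\|A\|_1$ for an $m\times n$ matrix.

```python
import numpy as np


def norm_1(A):
    """Matrix 1-norm: the largest absolute column sum."""
    return np.abs(A).sum(axis=0).max()


def norm_2(A):
    """Matrix 2-norm: the largest singular value of A."""
    return np.linalg.svd(A, compute_uv=False).max()


# Arbitrary example matrix (assumed for illustration).
A = np.array([[1.0, -2.0, 3.0],
              [4.0, 0.0, -1.0]])
m, n = A.shape

one = norm_1(A)
two = norm_2(A)

# Equivalence bounds: the cheap 1-norm brackets the costlier 2-norm.
lower = one / np.sqrt(m)
upper = np.sqrt(n) * one
```

Because the two norms bound each other up to constants that depend only on the matrix size, a sequence of matrices converges in one norm exactly when it converges in the other.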

Does it really take 10¹⁴¹ years to compute the determinant of a 100×100 matrix?

Well, it depends on how you compute it. If you compute it directly from the definition of the determinant, it can in fact take more than 10¹⁴¹ years, as we’ll see.${}^\dagger$ Let’s recall the definition first. The determinant of a matrix $\mathbf A$ is $$\det(\mathbf A) = \sum\limits_p \sigma(p) a_{1p_1} a_{2p_2} \dots a_{np_n},$$ where the sum is taken over the $n!$ permutations $p=(p_1,p_2,\dots,p_n)$ of the numbers $1,2,\dots,n$ and $\sigma(p)$ is the sign of the permutation $p$. The total number of multiplications in this definition is $n! (n-1)$. Based on my…
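The definition translates almost literally into code. The following is a minimal pure-Python sketch, with hypothetical function names, that evaluates the permutation sum for a small matrix and counts the $n!(n-1)$ multiplications; it is only meant to show why this route is hopeless for $n=100$.

```python
import math
from itertools import permutations


def permutation_sign(p):
    """Sign of a permutation: +1 for an even number of inversions, -1 for odd."""
    inversions = sum(
        1
        for i in range(len(p))
        for j in range(i + 1, len(p))
        if p[i] > p[j]
    )
    return -1 if inversions % 2 else 1


def det_by_definition(A):
    """Determinant as a sum over all n! permutations (usable only for tiny n)."""
    n = len(A)
    total = 0
    for p in permutations(range(n)):
        term = permutation_sign(p)
        for row, col in enumerate(p):
            term *= A[row][col]
        total += term
    return total


def multiplications_in_definition(n):
    """Number of multiplications among matrix entries: n! * (n - 1)."""
    return math.factorial(n) * (n - 1)


# Small example matrix (assumed for illustration).
A = [[2, 0, 1],
     [1, 3, 2],
     [1, 1, 4]]
d = det_by_definition(A)
count_3 = multiplications_in_definition(3)
count_100 = multiplications_in_definition(100)
```

For the $3\times 3$ example the sum has only 6 terms, but `count_100` has 160 digits, which is what drives the estimate in the title.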

Reflectors should be your second nature

Reflectors are a class of matrices that are not introduced in all linear algebra textbooks. However, the book of Carl D. Meyer uses this class of matrices heavily for various fundamental results. Indeed, the book uses reflectors for theoretical reasons, such as proving the existence of fundamental transformations like SVD, QR, Triangulation, or Hessenberg decompositions (more to come below), as well as applications, such as the implementation of the QR algorithm via the Householder transformation or solution of large-scale linear systems…
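As a quick illustration, an elementary reflector about the hyperplane orthogonal to a nonzero vector $u$ is $R = I - 2\frac{uu^T}{u^Tu}$. The sketch below assumes NumPy and uses hypothetical helper names `reflector` and `householder_vector`; it builds the Householder reflector that maps a given vector onto a multiple of the first coordinate vector, which is the basic step behind Householder-based QR.

```python
import numpy as np


def reflector(u):
    """Elementary reflector R = I - 2 u u^T / (u^T u) for a nonzero vector u."""
    u = np.asarray(u, dtype=float).reshape(-1, 1)
    return np.eye(u.shape[0]) - 2.0 * (u @ u.T) / (u.T @ u).item()


def householder_vector(x):
    """Vector u such that reflector(u) maps x onto a multiple of e_1.

    The sign is chosen to avoid cancellation when x is nearly parallel to e_1.
    """
    x = np.asarray(x, dtype=float)
    sign = 1.0 if x[0] >= 0 else -1.0
    u = x.copy()
    u[0] += sign * np.linalg.norm(x)
    return u


# Example vector (assumed for illustration).
x = np.array([3.0, 4.0, 0.0])
R = reflector(householder_vector(x))
Rx = R @ x  # all entries below the first are annihilated
```

A reflector is symmetric and is its own inverse, so it is also orthogonal; these properties are what make it convenient both in proofs and in numerically stable algorithms.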

What is the point of Cauchy-Schwarz, Minkowski and Hölder inequalities?

These three inequalities often appear as a package in textbooks on real analysis, signal processing or linear algebra. It is good to know the main reason that we see this package all the time, and to separate the role of each of these three fundamental inequalities. The overall reason is that these inequalities are the key to generalizing the facts about vectors in 2D/3D spaces and the Euclidean norm to higher dimensional spaces and (non-Euclidean) $p$-norms. Each of…
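For reference, the standard statements of the three inequalities for vectors $x, y \in \mathbb{R}^n$ are given below (the notation is assumed here and may differ from the full post):

$$|x^T y| \leq \|x\|_2 \, \|y\|_2 \quad \text{(Cauchy-Schwarz)},$$

$$|x^T y| \leq \|x\|_p \, \|y\|_q, \quad \tfrac{1}{p} + \tfrac{1}{q} = 1, \; p, q > 1 \quad \text{(Hölder)},$$

$$\|x+y\|_p \leq \|x\|_p + \|y\|_p, \quad p \geq 1 \quad \text{(Minkowski)}.$$

Cauchy-Schwarz is the special case $p=q=2$ of Hölder, and Minkowski is the triangle inequality for the $p$-norm.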

On the importance of the Cauchy-Bunyakovskii-Schwarz Inequality

The triangle inequality $$||x+y||\leq ||x|| + ||y||$$ is possibly the earliest inequality that we learn. Starting from high school, we learn that the shortest path between two points is a single straight line, and that if we cover the same displacement using two or more straight lines, we travel farther. We know very well that the triangle inequality that we grasp so intuitively is not limited only to the 2D or 3D spaces that we can visualize, but to…

How to compute basis for the range, nullspace etc. of a matrix? 6 Approaches

The four fundamental spaces of a matrix $A$, namely the range and the nullspace of $A$ itself and of its transpose $A^T$, are the heart of linear algebra. We often find ourselves in need of computing a basis for the range or the nullspace of a matrix, for theoretical or practical purposes. There are many ways of computing a basis for the range or nullspace of $A$ or $A^T$. Some are better for application, either due to their robustness against floating point…
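One commonly used approach is the SVD, sketched below with NumPy. It is not necessarily one of the six approaches covered in the post; the helper name `range_and_nullspace` and the relative tolerance are assumptions made for illustration.

```python
import numpy as np


def range_and_nullspace(A, tol=1e-10):
    """Orthonormal bases for the range and the nullspace of A via the SVD.

    Singular values below tol * (largest singular value) are treated as zero;
    this threshold is an assumed choice and may need tuning in practice.
    """
    A = np.asarray(A, dtype=float)
    U, s, Vt = np.linalg.svd(A)  # full SVD: U is m x m, Vt is n x n
    if s.size and s.max() > 0:
        rank = int(np.sum(s > tol * s.max()))
    else:
        rank = 0
    range_basis = U[:, :rank]   # first `rank` left singular vectors
    null_basis = Vt[rank:, :].T  # remaining right singular vectors
    return range_basis, null_basis


# Rank-2 example: the second row is twice the first.
A = np.array([[1.0, 2.0, 3.0],
              [2.0, 4.0, 6.0],
              [1.0, 0.0, 1.0]])
range_basis, null_basis = range_and_nullspace(A)
```

Applying the same function to `A.T` gives bases for the other two fundamental spaces, the range and nullspace of $A^T$.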

EBT-III matrices should be a key tool in your inventory

By reading this post you’ll be able to comprehend the basic mechanism behind the proof of

- LU decomposition
- Schur Inversion Lemma
- The Sherman-Morrison-Woodbury inversion formula
- Small perturbations can’t reduce rank (p216-217 of Meyer)
- Rank of a matrix equals the rank of its largest nonsingular submatrix (p214 of Meyer; see also Exercise 4.5.15)
- Characteristic polynomial of $AB$ and $BA$ is the same for square $A,B$ (see Exercise 7.1.19, 6.2.16 and eq. (6.2.1) of Meyer)
- And many more theorems and lemmas (a few…

Can we really say nothing about the inverse of A+B?

Unfortunately, there is no general formula that simplifies the computation of a matrix inverse $(A+B)^{-1}$ for arbitrary $A$ and $B$. If one of the matrices corresponds to a low-rank update (e.g., $B=CD^T$ for some $C, D\in\mathbb{R}^{n\times k}$ with $k<n$), one can use the Sherman-Morrison-Woodbury (S.M.W.) formula to great effect. In other situations, however, this formula does not simplify the calculations. But all hope is not lost. There is another case where $(A+B)^{-1}$ can be computed relatively efficiently. To understand how and…
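For the low-rank case, the S.M.W. formula reads $(A + CD^T)^{-1} = A^{-1} - A^{-1}C\,(I + D^TA^{-1}C)^{-1}D^TA^{-1}$. The sketch below assumes NumPy, a hypothetical helper name `smw_inverse`, and a diagonal $A$ whose inverse is cheap; it checks the formula against a direct inversion on random data.

```python
import numpy as np


def smw_inverse(A_inv, C, D):
    """Inverse of A + C D^T from a known A^{-1}, via Sherman-Morrison-Woodbury.

    Only a k x k system is solved, where k is the number of columns of C and D.
    """
    k = C.shape[1]
    AiC = A_inv @ C                  # n x k
    small = np.eye(k) + D.T @ AiC    # k x k "capacitance" matrix
    return A_inv - AiC @ np.linalg.solve(small, D.T @ A_inv)


# Assumed test setup: diagonal A (trivial to invert) plus a rank-2 update.
rng = np.random.default_rng(0)
n, k = 6, 2
a = rng.uniform(1.0, 2.0, size=n)
A = np.diag(a)
A_inv = np.diag(1.0 / a)
C = 0.1 * rng.standard_normal((n, k))
D = 0.1 * rng.standard_normal((n, k))

fast = smw_inverse(A_inv, C, D)
direct = np.linalg.inv(A + C @ D.T)
```

The saving comes from replacing an $n\times n$ inversion with a $k\times k$ solve, which pays off only when $A^{-1}$ is already known or cheap and $k$ is much smaller than $n$.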