On the Attention Mechanism

What is revolutionary about the attention mechanism is that it allows a network to dynamically change the weight given to a piece of information. This is not possible with, say, traditional RNNs or LSTMs. Attention mechanisms were proposed for sequence-to-sequence translation, where they made a significant difference by allowing networks to identify which words are most relevant to the $t$th word in the translation (i.e., soft alignment), and to put more weight on them even if they are far apart from the word that is…
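To make the idea of soft alignment concrete, here is a minimal sketch of attention for a single output position, written with the Python standard library only. The scaled dot-product score, the function names `softmax` and `attend`, and all the toy numbers are assumptions made for illustration; other score functions exist and this is not tied to any particular translation model.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Scaled dot-product attention for a single query (assumed score function).

    Returns the attention weights (one per source position) and the
    context vector, i.e. the weighted average of the values.
    """
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    context = [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]
    return weights, context


# Hypothetical toy source sentence with 4 positions and 2-D keys.
keys = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5], [4.0, 0.0]]
values = keys            # for simplicity, the values equal the keys
query = [2.0, 0.0]       # stands in for the decoder state at the t-th output word

weights, context = attend(query, keys, values)

# Index of the source position that receives the most weight.
best = max(range(len(weights)), key=weights.__getitem__)
print(weights, best)
```

The weights are recomputed for every query, so a distant source position (here the last one) can dominate the context vector whenever its key matches the query well. That is the dynamic re-weighting the paragraph above refers to.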

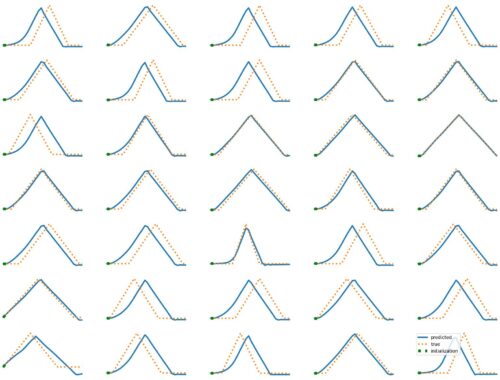

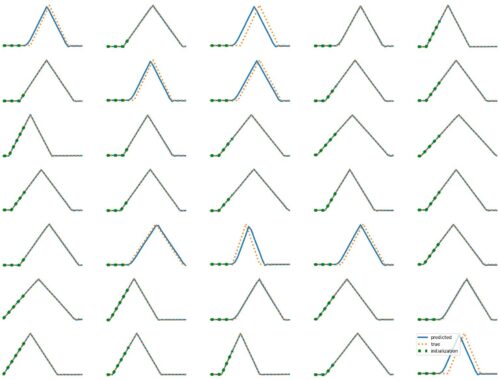

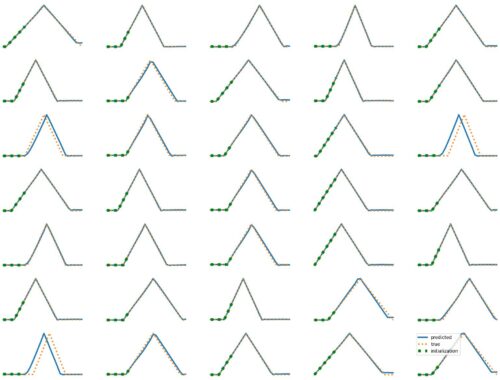

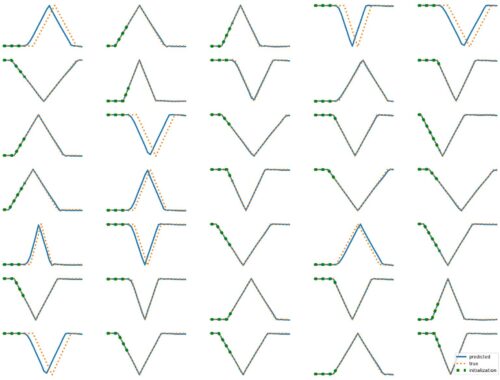

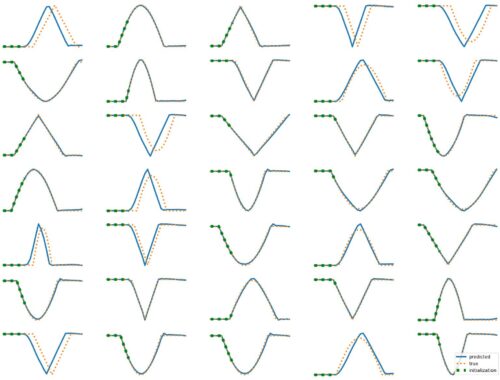

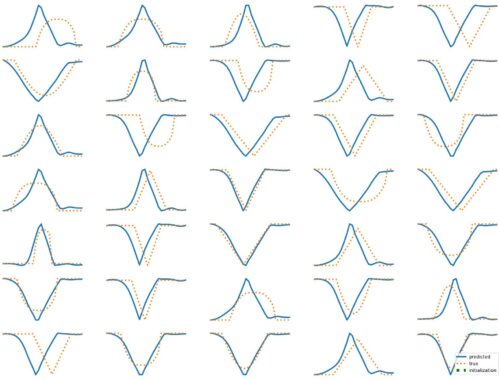

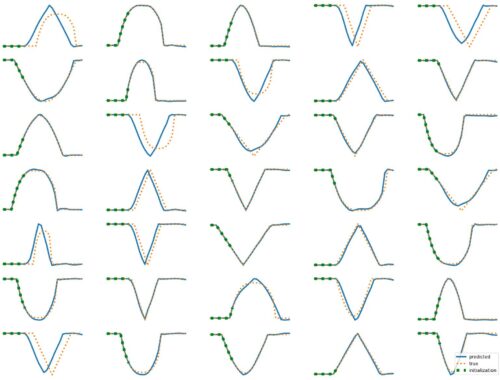

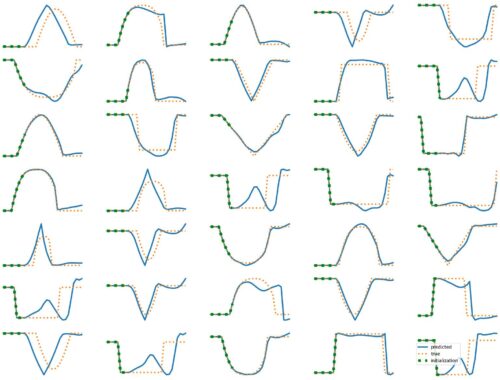

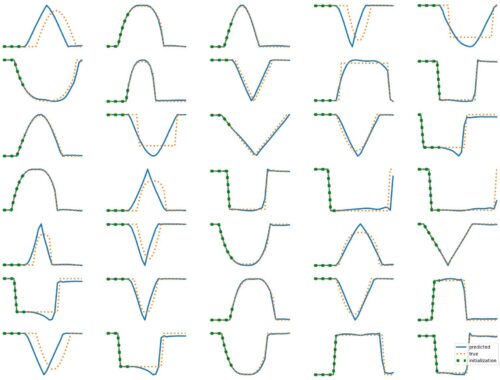

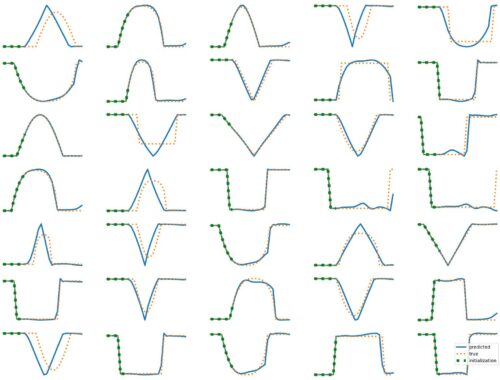

LSTM Examples #1: Basic Time Series Prediction

The results above illustrate how LSTM works with continuous input/output on simple 1D time series prediction tasks. We aim to estimate simple shapes using past data, following increasingly complex scenarios. The results show the limitations of continuous time-series prediction via LSTM; the last slides show that even simple shapes cannot be predicted accurately. Still, the code is helpful for illustrating the basics of time-series prediction. Below we provide all the commands and the Python script needed to generate…
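Independently of the script mentioned above, the basic data setup for predicting a 1D series from past data can be sketched as follows. The helper `make_windows`, the window length, and the sine-wave series are all hypothetical choices made for illustration; the sketch only builds (past window, next value) training pairs and does not include a model.

```python
import math


def make_windows(series, window):
    """Split a 1D series into (past window, next value) training pairs."""
    n_pairs = len(series) - window
    inputs = [series[i:i + window] for i in range(n_pairs)]
    targets = [series[i + window] for i in range(n_pairs)]
    return inputs, targets


# Hypothetical "simple shape": a sampled sine wave.
series = [math.sin(0.1 * t) for t in range(100)]

# Each input holds 10 past samples; the target is the sample that follows.
X, y = make_windows(series, window=10)
print(len(X), len(X[0]), len(y))
```

A sequence model such as an LSTM would then be trained to map each window in `X` to the corresponding value in `y`.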

Self-supervision on Deep Nets

Arguably, the main reason that deep nets became so powerful is self-supervision. In many domains, from images to text to DNA analysis, the concept of self-supervision was sufficient to generate practically infinite “labelled” data for training deep models. The idea is simple yet extremely powerful: just hide some parts of the (unlabelled) data and turn the hidden parts into the labels to predict. Here are some notes (mostly to myself) about self-supervision. There are two standard ways to do self-supervision:…

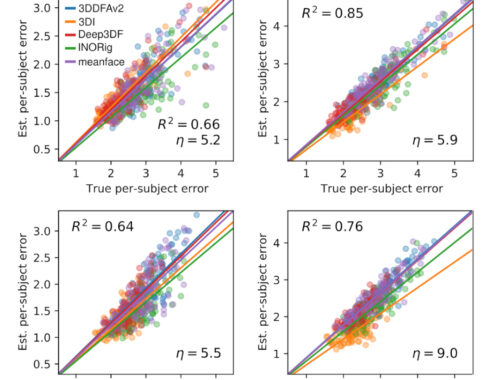
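The core idea described above, hiding parts of unlabelled data and using the hidden parts as labels, can be sketched in a few lines. This is a minimal illustration on a list of tokens; the `[MASK]` placeholder, the function name `make_masked_example`, and the masking probability are assumptions, not details taken from the notes.

```python
import random

MASK = "[MASK]"  # hypothetical placeholder for a hidden token


def make_masked_example(tokens, mask_prob=0.15, rng=None):
    """Turn unlabelled tokens into an (inputs, labels) training pair.

    Each token is hidden with probability mask_prob. Hidden positions
    carry the original token as their label; all other positions carry
    None, meaning there is nothing to predict there.
    """
    rng = rng or random.Random()
    inputs, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            inputs.append(MASK)
            labels.append(tok)
        else:
            inputs.append(tok)
            labels.append(None)
    return inputs, labels


tokens = "the cat sat on the mat".split()
inputs, labels = make_masked_example(tokens, mask_prob=0.5, rng=random.Random(0))
print(inputs)
print(labels)
```

No human annotation is involved: every unlabelled sequence yields as many training pairs as there are ways to hide parts of it, which is where the practically infinite supply of "labelled" data comes from.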

State of the art in 3D face reconstruction may be wrong

Our IJCB’23 study exposed a significant problem with the benchmark procedures of 3D face reconstruction, something that should make any researcher in the field worry. That is, we showed that the standard metric for evaluating 3D face reconstruction methods, namely geometric error via Chamfer (i.e., nearest-neighbor) correspondence, is highly problematic. Results showed that the Chamfer error not only significantly underestimates the true error, but does so inconsistently across reconstruction methods, so the ranking between methods can be artificially…
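A toy example shows why nearest-neighbor correspondence can underestimate the error. The sketch below is not the benchmark code from the study; the one-directional Chamfer definition, the function names, and the five-point "shapes" are assumptions chosen to keep the arithmetic easy to follow. It requires Python 3.8+ for `math.dist`.

```python
import math


def chamfer_error(reconstruction, ground_truth):
    """Mean distance from each reconstructed point to its NEAREST ground-truth point."""
    total = sum(min(math.dist(p, g) for g in ground_truth) for p in reconstruction)
    return total / len(reconstruction)


def true_correspondence_error(reconstruction, ground_truth):
    """Mean distance between points that share an index (known correspondence)."""
    total = sum(math.dist(p, g) for p, g in zip(reconstruction, ground_truth))
    return total / len(reconstruction)


# Hypothetical ground truth: five points on a line, one unit apart.
ground_truth = [(float(i), 0.0, 0.0) for i in range(5)]

# Hypothetical reconstruction: every point slid one unit along the line.
reconstruction = [(x + 1.0, y, z) for x, y, z in ground_truth]

chamfer = chamfer_error(reconstruction, ground_truth)
true_err = true_correspondence_error(reconstruction, ground_truth)
print(chamfer, true_err)  # Chamfer is about 0.2, the true error is 1.0
```

Every reconstructed point is off by one unit, yet four of the five points land exactly on a different ground-truth point, so the nearest-neighbor error is only about 0.2. Because the nearest neighbor is by definition at least as close as the true correspondent, the Chamfer error can never exceed the true error when both are computed against the same ground-truth points, and how far it falls below depends on the kind of mistake a method makes.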