State of the art in 3D face reconstruction may be wrong

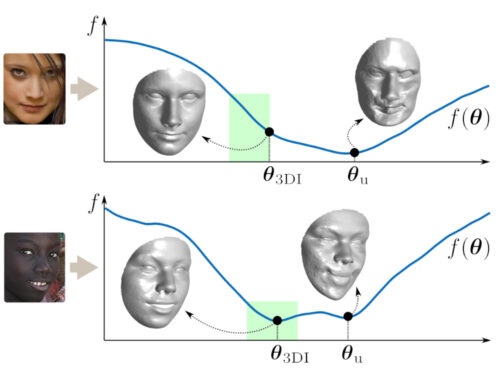

Our IJCB’23 study exposed a significant problem with the benchmark procedures of 3D face reconstruction, one that should worry any researcher in the field. We showed that the standard metric for evaluating 3D face reconstruction methods, namely geometric error via Chamfer (i.e., nearest-neighbor) correspondence, is highly problematic. The Chamfer error not only significantly underestimates the true error, but does so inconsistently across reconstruction methods, so the ranking between methods can be artificially altered. As such, it is difficult to claim that one method is superior to another based on a relatively small difference in Chamfer error.
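
To make the underestimation concrete, here is a minimal toy sketch, not the evaluation code from the paper. It assumes point sets rather than meshes, a one-directional nearest-neighbor error, and a "true" correspondence given simply by matching indices; the function names `true_error` and `chamfer_error` are made up for illustration. Because the true correspondent is always among the nearest-neighbor candidates, the nearest-neighbor error can never exceed the true error in this setup.

```python
import math
import random

def true_error(recon, gt):
    """Mean distance from each reconstructed point to its true correspondent (same index)."""
    return sum(math.dist(p, q) for p, q in zip(recon, gt)) / len(recon)

def chamfer_error(recon, gt):
    """Mean distance from each reconstructed point to its nearest ground-truth point."""
    return sum(min(math.dist(p, q) for q in gt) for p in recon) / len(recon)

rng = random.Random(0)

# Toy "ground truth": a 15x15 planar grid with unit spacing (an assumption for illustration).
gt = [(float(x), float(y), 0.0) for x in range(15) for y in range(15)]

# Toy "reconstruction": every ground-truth point displaced by Gaussian noise.
recon = [tuple(c + rng.gauss(0.0, 0.5) for c in p) for p in gt]

err_true = true_error(recon, gt)
err_chamfer = chamfer_error(recon, gt)
print(f"true error:    {err_true:.3f}")
print(f"chamfer error: {err_chamfer:.3f}")
```

How large the gap is depends on the kind of error a method makes, which is one way to see why the underestimation need not be consistent across methods.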

Also, since this is my personal blog, I want to share an even more concerning consequence, even though I don’t have data to back it up (and such data is difficult to obtain). Given that pretty much all recent 3D reconstruction methods are based on deep learning, it is possible to “hack” the Chamfer metric: to train a network that minimizes Chamfer error without necessarily reducing the true error. In any case, it is not unreasonable to say that Chamfer error cannot be the sole authority on a method’s superiority.
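
As a purely hypothetical illustration of how low Chamfer error can coexist with high true error (a toy construction, not a result from the study), consider a reconstruction whose points all stay on the ground-truth surface but are slid one grid step along it. The helper names and the planar grid are assumptions made for this sketch.

```python
import math

def true_error(recon, gt):
    """Mean distance from each reconstructed point to its true correspondent (same index)."""
    return sum(math.dist(p, q) for p, q in zip(recon, gt)) / len(recon)

def chamfer_error(recon, gt):
    """Mean distance from each reconstructed point to its nearest ground-truth point."""
    return sum(min(math.dist(p, q) for q in gt) for p in recon) / len(recon)

# Toy ground truth: a 10x10 planar grid with unit spacing.
gt = [(float(x), float(y), 0.0) for y in range(10) for x in range(10)]

# Toy reconstruction: every point slid one grid step along x, staying on the plane.
recon = [(x + 1.0, y, z) for (x, y, z) in gt]

slid_true = true_error(recon, gt)
slid_chamfer = chamfer_error(recon, gt)
print(f"true error:    {slid_true:.2f}")
print(f"chamfer error: {slid_chamfer:.2f}")
```

Every point is off by a full grid step, yet only the points pushed past the edge of the grid register any nearest-neighbor error, so the Chamfer-style error comes out at a tenth of the true error.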

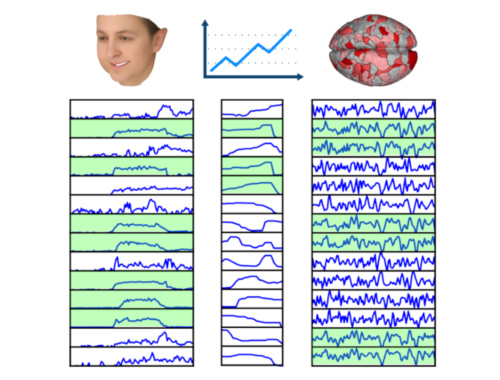

Our IJCB’23 paper also presented a meta-evaluation framework for assessing geometric error estimators. Given that the Chamfer approach is subpar, we believe it’s important to have criteria for determining which geometric error estimators are good.

You can read more about the study in the paper (and poster) below: